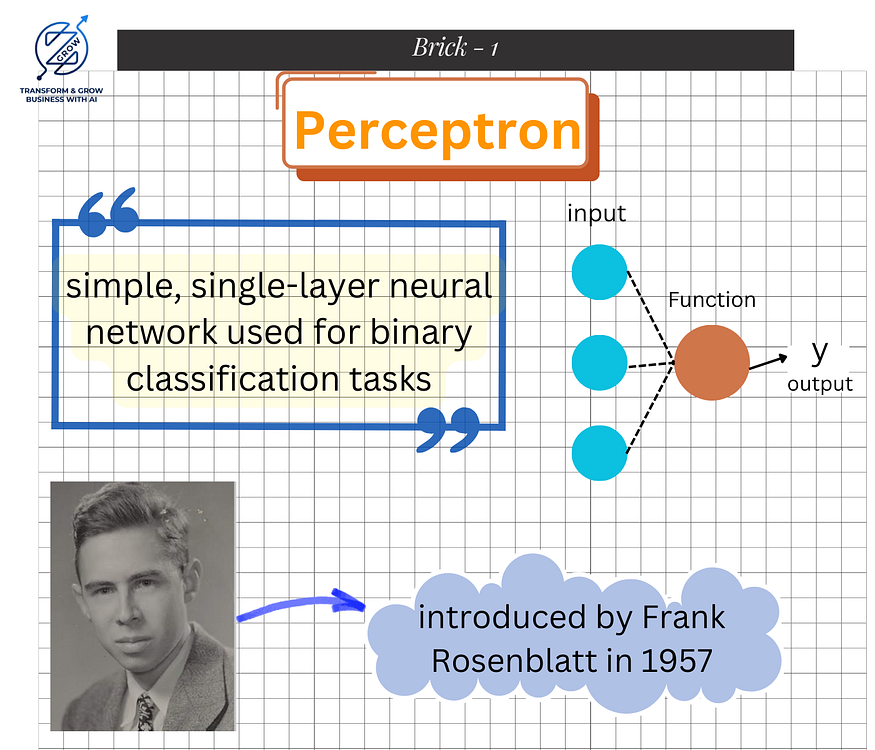

Welcome to this post on the perceptron, part of our Neural Networks series.

Whether you are thinking about ANNs, RNNs, CNNs, FNNs, GANs, LSTMs, or any other architecture, remember that the perceptron is the basic building block underneath all of them. It is one of the simplest yet most fundamental artificial neural network architectures, and the slides below walk through it with a realistic scenario. If you're just getting started with AI, understanding the perceptron is a great way to build your foundation in neural networks.

The perceptron is an algorithm for supervised learning of binary classifiers. It’s a type of linear classifier, i.e., a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector.
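Written out, that linear predictor function is usually the step rule below. This is the common textbook form; the bias $b$ and the 0/1 output labels are conventions we assume here rather than details taken from the slides:

$$
\hat{y} =
\begin{cases}
1 & \text{if } \sum_{i} w_i x_i + b > 0 \\
0 & \text{otherwise}
\end{cases}
$$

Here $w_i$ are the weights, $x_i$ the input features, $b$ the bias, and $\hat{y}$ the predicted class.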

The perceptron was introduced by Frank Rosenblatt in 1957. It represents the simplest type of feedforward neural network, consisting of a single layer of input nodes that are fully connected to a layer of output nodes. This basic structure makes it a powerful tool for understanding the principles behind more complex neural network models.

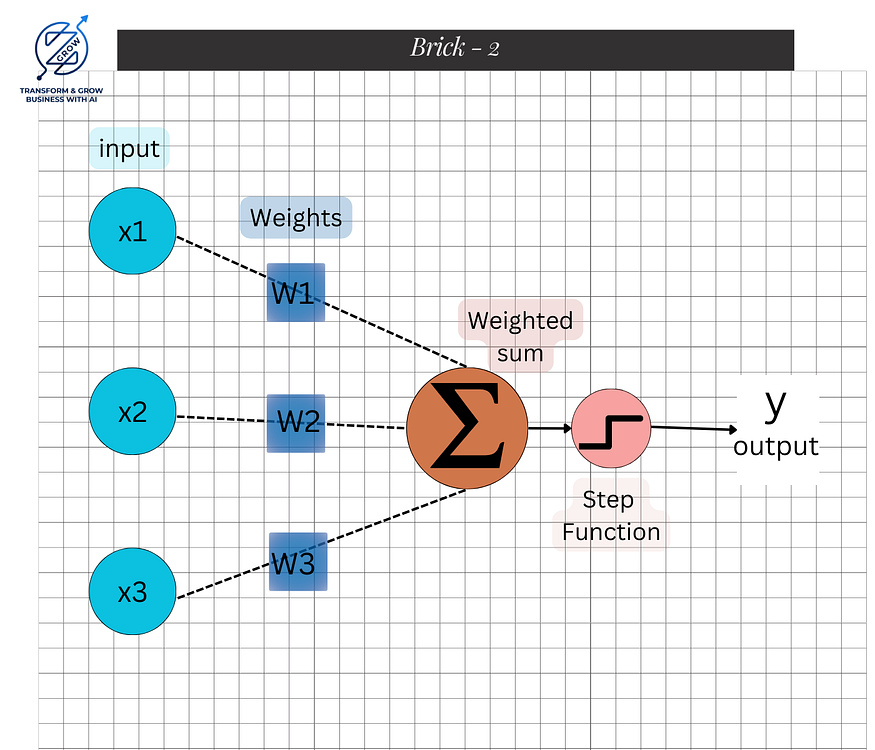

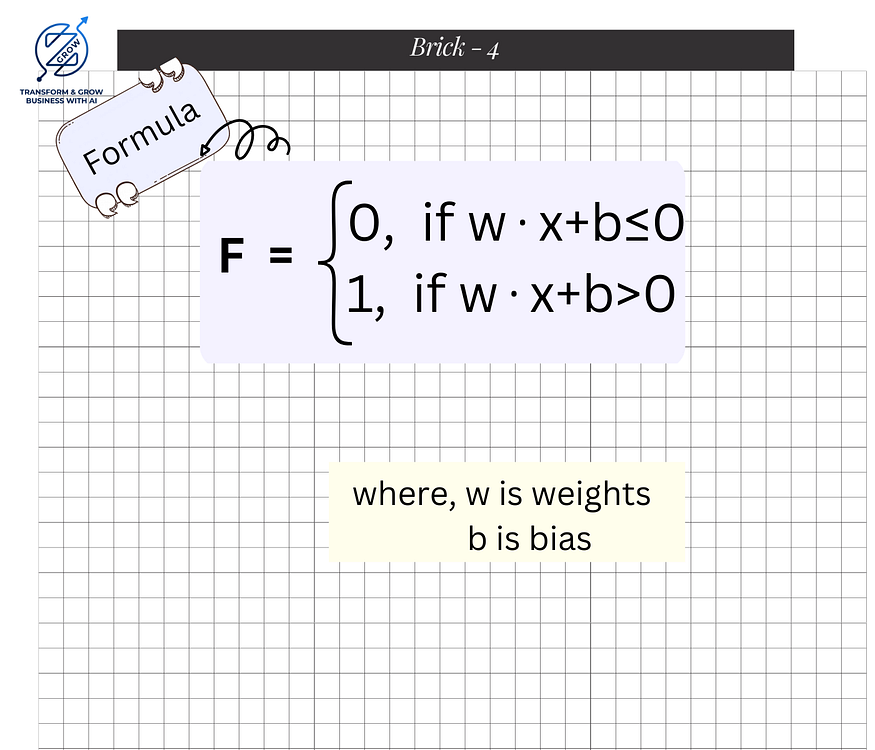

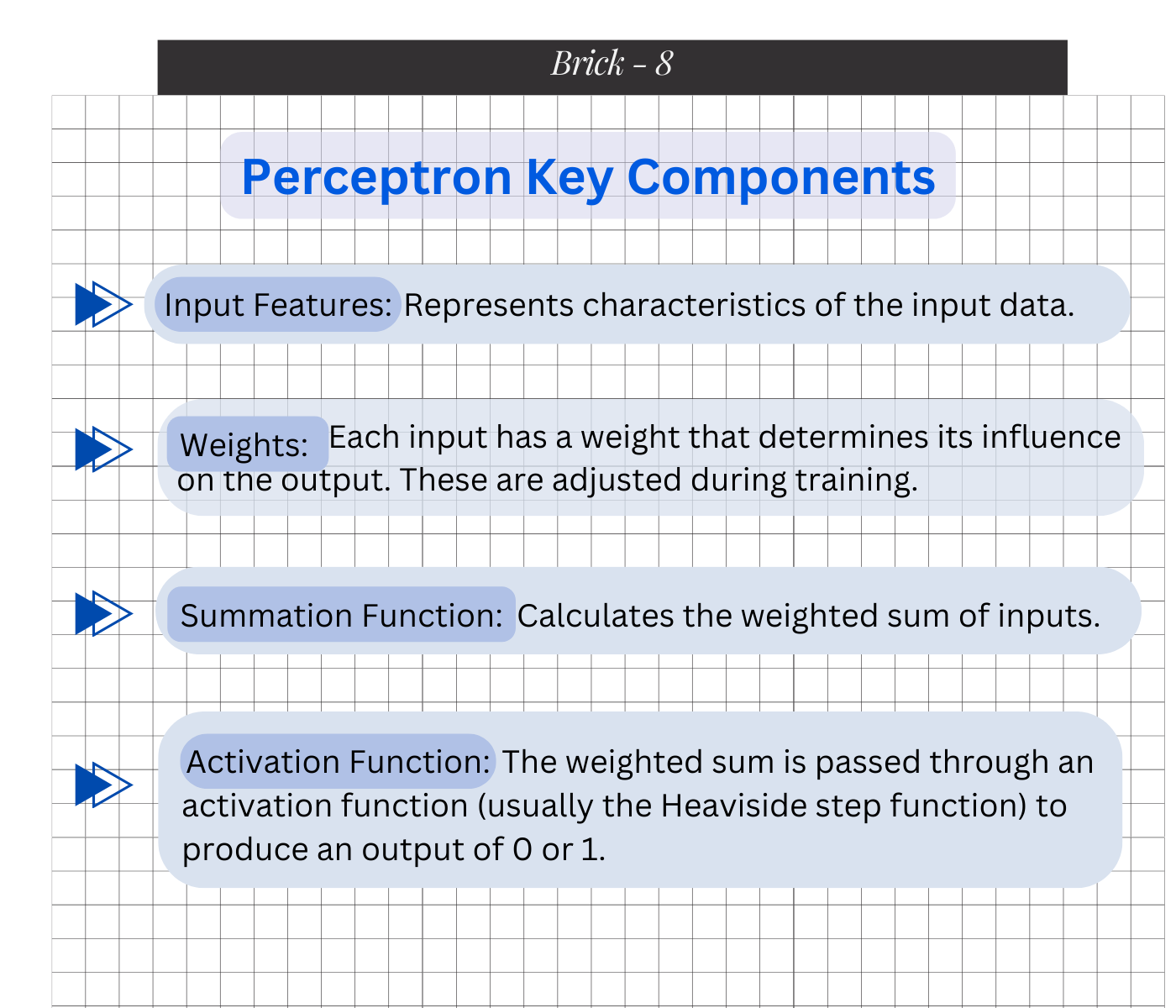

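A perceptron consists of input features, a weight for each input, a bias, a weighted sum of the inputs, and an activation (step) function that turns that sum into a binary output.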

The perceptron learning rule is simple yet effective. It updates the weights in proportion to the error between the predicted output and the actual output:

$$\Delta w_i = \eta (y - \hat{y}) x_i$$

where $\eta$ is the learning rate, $y$ is the actual output, $\hat{y}$ is the predicted output, and $x_i$ is the $i$-th input feature.
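Here is a minimal sketch of that rule in plain Python. The function names, the 0/1 step activation, the zero starting weights, the learning rate of 0.5, updating the bias as if it were a weight on a constant input of 1, and the logical AND function as training data are all our own assumptions for illustration, not details from the slides.

```python
# Minimal perceptron trained with the rule: delta_w_i = eta * (y - y_hat) * x_i
# Assumptions (illustrative only): 0/1 step activation, zero initial weights,
# bias updated like a weight on a constant input of 1, AND as toy data.

def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def train_perceptron(samples, targets, learning_rate=0.5, max_epochs=100):
    """Apply the perceptron learning rule until a full pass has no errors."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, y in zip(samples, targets):
            error = y - predict(weights, bias, x)  # (y - y_hat) is -1, 0, or +1
            if error != 0:
                errors += 1
                # delta_w_i = eta * (y - y_hat) * x_i for every weight
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if errors == 0:
            # Every sample was classified correctly in this pass: stop early.
            return weights, bias, epoch
    return weights, bias, max_epochs


if __name__ == "__main__":
    X = [(0, 0), (0, 1), (1, 0), (1, 1)]
    Y = [0, 0, 0, 1]  # logical AND
    w, b, epochs = train_perceptron(X, Y)
    print("weights:", w, "bias:", b, "epochs:", epochs)
    print("predictions:", [predict(w, b, x) for x in X])
```

Because AND is linearly separable, the loop stops once a full pass over the data produces no mistakes, and the printed predictions then match the targets `[0, 0, 0, 1]`.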

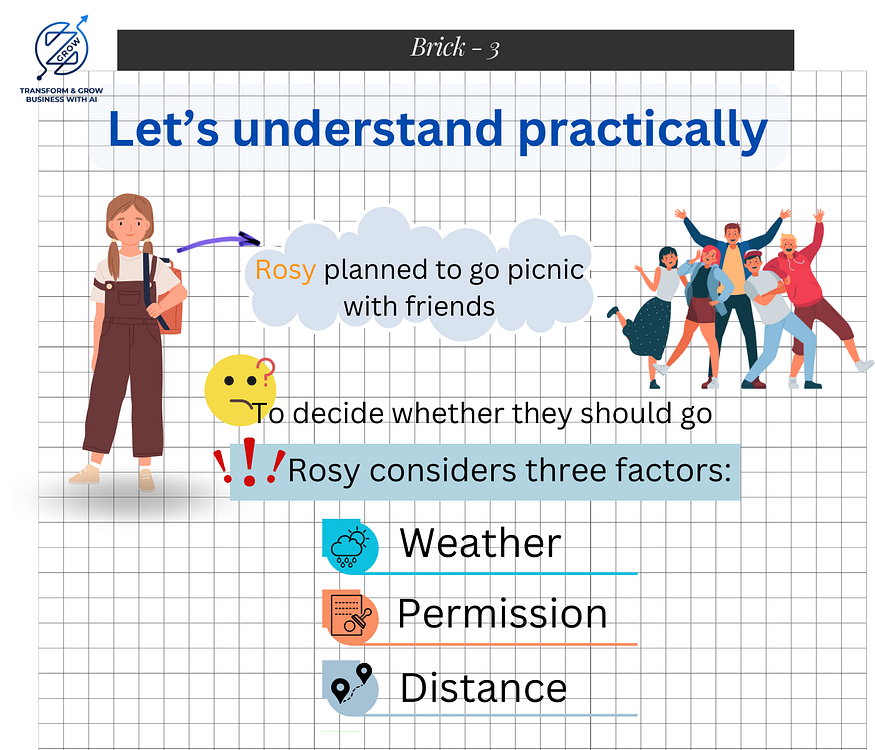

Meet Rosy, who is planning to go on a picnic with her friends. To decide whether they should go, Rosy considers several factors (features) before making a decision:

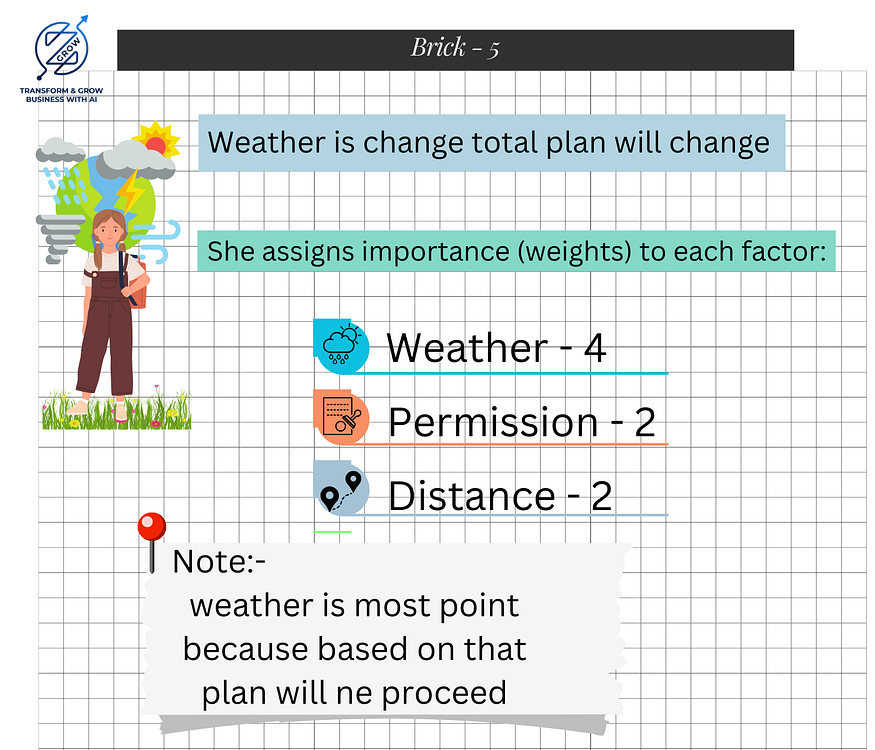

Rosy assigns importance (weights) to each factor:

The weather is the most critical factor, so it gets the highest weight. Here’s how Rosy makes her decision:

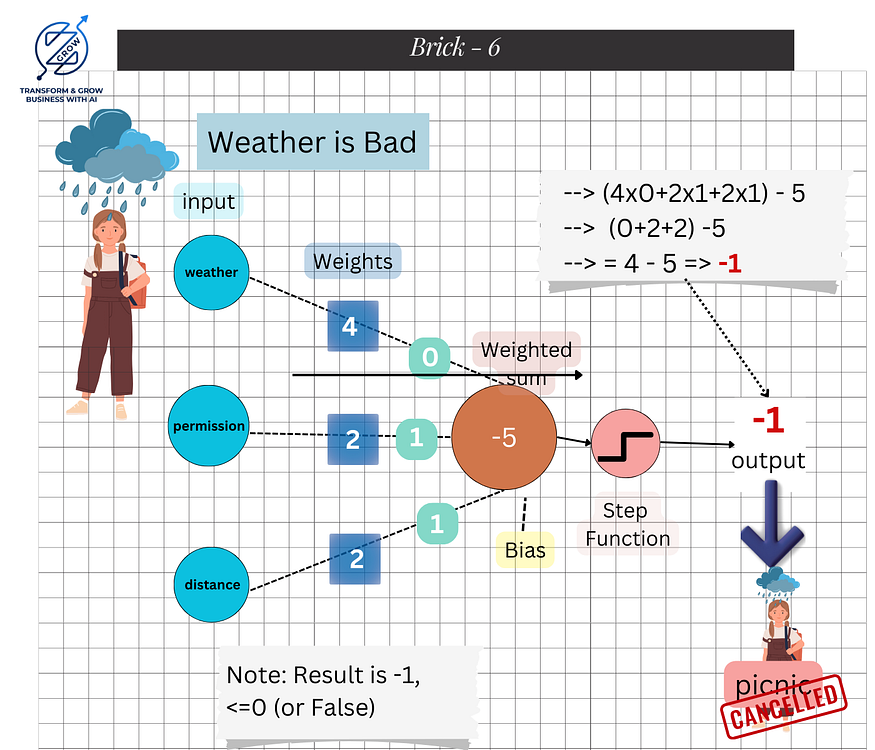

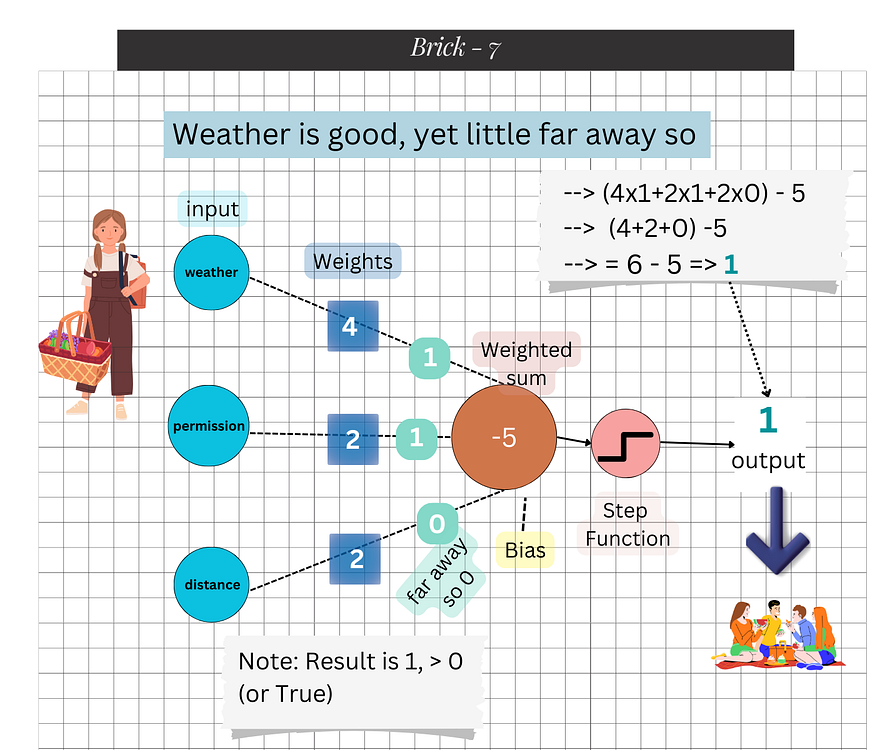

Let’s see how Rosy uses these factors to decide about the picnic:

Rosy also has a bias (threshold) of 5. To decide, she subtracts this threshold from the weighted sum of the factors: if the result is greater than 0, they go on the picnic; if it is less than or equal to 0, they don't. Since the result is -1, Rosy decides not to go on the picnic.
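The picnic decision can be sketched as a single perceptron forward pass. Rosy's actual factors and weights are in the slides, so the feature names (`good_weather`, `friends_available`, `transport_available`), their values, and the weights below are hypothetical, chosen only so that the weather carries the highest weight and the weighted sum minus the threshold of 5 comes out to -1, as in the story.

```python
# Rosy's picnic decision as one perceptron forward pass.
# HYPOTHETICAL features and weights: made up so that the weighted sum (4)
# minus the threshold (5) reproduces the result of -1 described in the story.

features = {"good_weather": 0, "friends_available": 1, "transport_available": 1}
weights = {"good_weather": 6, "friends_available": 2, "transport_available": 2}
THRESHOLD = 5  # Rosy's bias (threshold)


def decide(features, weights, threshold):
    """Return (score, go): score = weighted sum - threshold; go only if score > 0."""
    weighted_sum = sum(weights[name] * value for name, value in features.items())
    score = weighted_sum - threshold
    return score, score > 0


score, go = decide(features, weights, THRESHOLD)
print(score, "go on the picnic" if go else "stay home")  # prints: -1 stay home
```

Setting `good_weather` to 1 in this sketch gives 6 + 2 + 2 - 5 = 5, which is above 0, so the same perceptron would say go. That is the point of giving the weather the largest weight: it can flip the decision on its own.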

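This story illustrates the basic idea behind a perceptron: the factors are the input features, the importance Rosy gives each factor is its weight, the threshold is the bias, and the go/don't-go decision is the binary output.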

By understanding this simple story, you get the essence of how a perceptron works to make binary decisions.

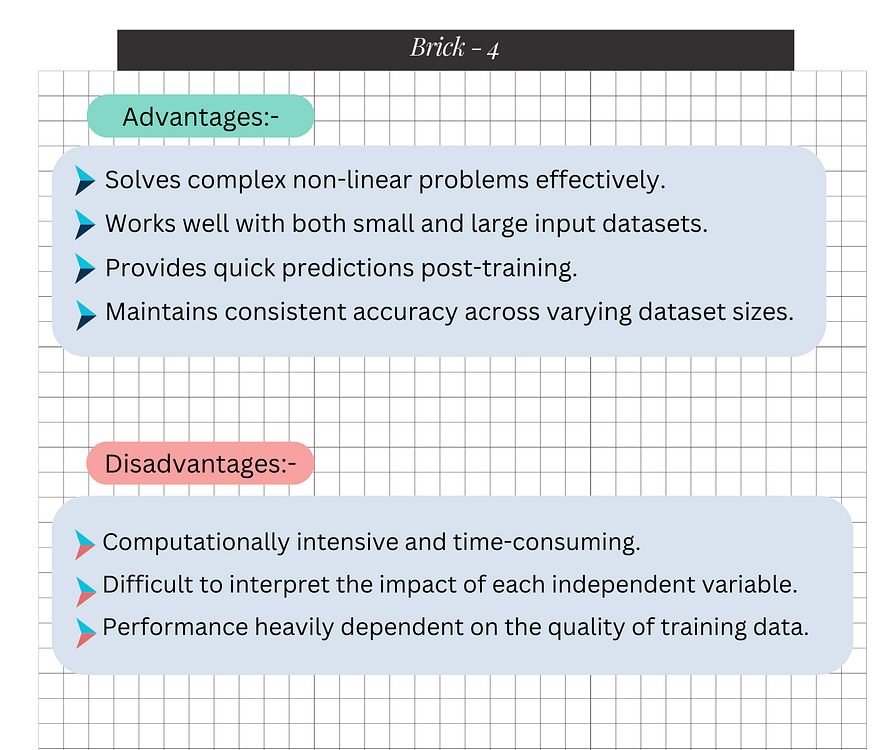

Despite its simplicity, the perceptron is powerful for solving linearly separable problems. It laid the groundwork for the development of more complex neural network architectures and algorithms. Some applications include:

While the perceptron is an important concept in neural network theory, it has its limitations. The most significant is that it can only solve problems that are linearly separable, meaning the two classes can be divided by a straight line (or, in higher dimensions, a hyperplane). It cannot learn non-linear patterns; the classic example is the XOR function.
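To see this limitation concretely, here is a sketch that applies the same learning rule to XOR. The function names, the learning rate of 0.5, the 50 training epochs, and the zero initial weights are our own illustrative assumptions. Because no single line separates XOR's two classes, no pass over the data can finish with zero mistakes, so the error count never reaches 0 however long training runs.

```python
# Perceptron learning rule applied to XOR, which is not linearly separable.
# Assumptions (illustrative only): 0/1 step activation, zero initial weights,
# learning rate 0.5, bias updated like a weight on a constant input of 1.

def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def errors_per_epoch(samples, targets, learning_rate=0.5, epochs=50):
    """Train for a fixed number of epochs and record misclassifications in each."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    history = []
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, targets):
            error = y - predict(weights, bias, x)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        history.append(errors)
    return weights, bias, history


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 1, 1, 0]  # XOR
w, b, history = errors_per_epoch(X, Y)
print("errors in last 5 epochs:", history[-5:])
print("predictions:", [predict(w, b, x) for x in X], "targets:", Y)
```

Every entry in `history` stays at 1 or more, and the final predictions never match all four targets. Solving XOR takes at least one hidden layer, which is where multi-layer networks come in.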

The perceptron may be simple, but it’s a crucial stepping stone in the world of neural networks and AI. Understanding its workings helps in grasping more advanced concepts in neural networks and deep learning.

We hope this post provided you with a clear and concise overview of the perceptron. Stay tuned for our next post, where we will delve deeper into more complex neural network architectures.

No need to memorize the terminology; just understand the concept.

Enjoy learning about perceptrons through our picnic trip example, and stay tuned for more!

Happy learning with building bricks.

Follow us for more insights!

Build the foundation with the next step of innovations.

#neuralnetwork #ArtificialIntelligence #GENAI #GenerativeAI #perceptron #Technology #Future #AITrends #Fundamentals #Algorithm